Duplicate content poses a significant challenge for search engines and website owners alike. When identical or substantially similar content appears at multiple URLs, search engines struggle to determine which version should be indexed and ranked. This can dilute link equity, lower rankings, and cause inconsistent search appearances. Understanding why duplicate content is a problem for SEO is essential for maintaining strong search visibility.

As someone who has managed duplicate content issues across hundreds of websites, I can attest that handling them properly is essential for keeping a site visible in search. Below, I cover the causes, solutions, and best practices for addressing duplicate content effectively.

Common Sources of Duplicate Content

Duplicate content can originate from several technical and content-based factors. Identifying these sources is the first step in resolving the issue:

Technical URL Variations

Many websites unintentionally generate duplicate content due to variations in URL structures, including:

- Differences between HTTP and HTTPS versions

- WWW vs. non-WWW versions

- Trailing slashes vs. non-trailing slashes

- Case-sensitive URLs (e.g., /Product vs. /product)
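The variations above can all be collapsed onto one preferred form before you compare or report on URLs. The following is a minimal sketch, not a definitive implementation: the function name `normalize_url` and the policy it encodes (HTTPS, the www host, lowercase paths, trailing slashes on directory-style paths) are assumptions chosen for illustration, so adjust them to whatever your site actually treats as preferred.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url: str) -> str:
    """Map common technical variants of a URL onto one assumed preferred form."""
    parts = urlsplit(url)

    # Hostnames are case-insensitive; .hostname also drops any port number.
    host = (parts.hostname or "").lower()
    if not host.startswith("www."):
        host = "www." + host  # assumed policy: the www host is preferred

    # Assumed policy: paths are lowercase. Only safe if the server agrees.
    path = parts.path.lower() or "/"

    # Assumed policy: directory-style paths end with a slash.
    # Paths whose last segment looks like a file (contains a dot) are left alone.
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"

    # Assumed policy: always HTTPS; the fragment is dropped.
    return urlunsplit(("https", host, path, parts.query, ""))


variants = [
    "http://example.com/Product",
    "https://example.com/product/",
    "HTTPS://WWW.Example.com/product",
]
# Every variant collapses to the same preferred URL.
print({normalize_url(u) for u in variants})
```

If two crawled URLs normalize to the same string but both return a 200 status, they are likely candidates for a redirect or canonical tag.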

CMS and E-commerce Challenges

Content management systems (CMS) often generate multiple URLs for the same content. This is common in:

- Pagination: Multiple pages containing similar or identical content.

- Session IDs and Tracking Parameters: These can create different URLs for the same page.

- Sorting and Filtering in E-commerce: Parameter-based filtering (e.g., color, size) generates multiple URL variations.

- Syndicated Content: Republishing articles on multiple platforms without proper canonicalization.
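To see how session IDs, tracking parameters, and sort options multiply URLs, it can help to strip them and check which URLs collapse together. This is a rough sketch under stated assumptions: `IGNORED_PARAMS` is a hypothetical list, and `strip_noise_params` is an illustrative helper, so replace the parameter names with the ones your platform actually emits.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change the page content.
IGNORED_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid", "sessionid", "sort",
}


def strip_noise_params(url: str) -> str:
    """Remove ignored parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # parameter order should not create a "new" URL
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(strip_noise_params(
    "https://www.example.com/shoes/?utm_source=news&color=red&sessionid=abc123"
))
```

Whether a filter such as `color` belongs in the ignored list depends on whether the filtered page is meant to rank on its own; that is a content decision, not something the code can decide.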

International Website Issues

Sites targeting different countries or languages may encounter duplicate content across:

- Regional domains or subdirectories (e.g., example.com/us/ vs. example.com/uk/)

- Language translations with minimal content differentiation

- Improper hreflang implementation

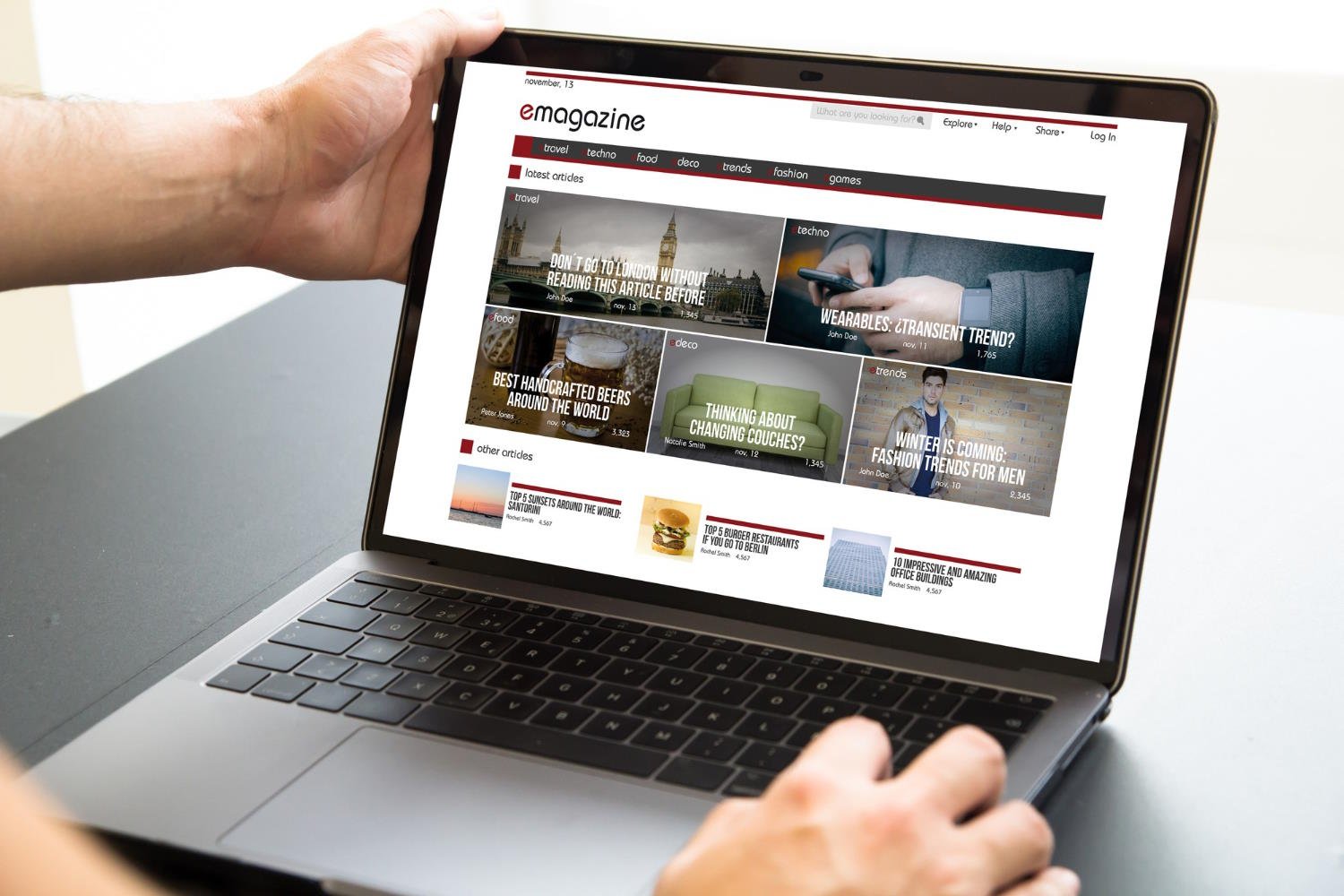

Identifying Duplicate Content Issues

Before fixing duplicate content, you need to find it. The following tools and methods cover most cases:

- Google Search Console

  - Check the Page indexing report (formerly the Coverage report) for pages flagged as duplicates, such as "Duplicate without user-selected canonical."

- Crawling Tools (e.g., Screaming Frog, Sitebulb, Lumar, formerly DeepCrawl)

  - Scan your website for duplicate title tags, meta descriptions, and body content.

- Plagiarism Detection Tools

  - Use Copyscape or Siteliner to find duplicate content within and outside your website.

- XML Sitemap Review

  - Check for duplicate URLs appearing in your sitemap.

- Canonical Tag Auditing

  - Ensure canonicalization is correctly implemented across all pages.
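If you already have the body text of your pages from a crawl export, a simple fingerprint comparison can surface exact duplicates. The sketch below is illustrative only: `content_fingerprint` and `group_duplicates` are hypothetical helper names, the input is assumed to be a dictionary of URL to extracted text, and the approach catches only text that is identical after whitespace and case normalization. Near-duplicates need a similarity measure, which dedicated crawling tools provide.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_duplicates(pages: dict) -> list:
    """Return groups of URLs whose normalized body text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    # Keep only fingerprints shared by more than one URL.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "/shoes/red": "Red  Shoes\n on sale",
    "/shoes/red?sort=price": "red shoes on sale",
    "/shoes/blue": "Blue shoes",
}
print(group_duplicates(pages))
```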

How to Fix Duplicate Content Issues

Once you identify duplicate content, use the following techniques to resolve the issue.

1. Implement Canonical Tags

A canonical tag tells search engines which version of a page should be treated as the original. Add the following tag to the <head> section:

<link rel="canonical" href="https://www.example.com/preferred-page/" />

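This helps consolidate link equity on the preferred URL. Search engines treat the canonical tag as a strong hint rather than a directive, so keep your redirects, internal links, and sitemap consistent with it. Note that Google does not apply a penalty for ordinary duplicate content; the benefit here is consolidated ranking signals.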
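To audit whether a page declares the canonical you expect, you can extract the tag from its HTML. This is a minimal sketch using Python's standard-library `html.parser`; the class name `CanonicalFinder` and the function `find_canonicals` are illustrative, and the code does not check that the tag sits inside `<head>`, which a fuller audit should.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        # rel may hold several space-separated values and any letter case.
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr.get("href"):
            self.canonicals.append(attr["href"])


def find_canonicals(html: str) -> list:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


html = (
    "<html><head>"
    '<link rel="canonical" href="https://www.example.com/preferred-page/" />'
    "</head><body></body></html>"
)
print(find_canonicals(html))
```

A page that returns zero canonicals, or more than one, is worth a manual look, since conflicting tags weaken the signal.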

2. Use 301 Redirects

For duplicate content caused by multiple URLs, implement 301 redirects to point duplicate pages to the preferred version. Ensure proper redirection for:

- HTTP → HTTPS

- Non-WWW → WWW (or vice versa)

- Old URLs → New URLs
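Rules like these can stack, so that a single request passes through several hops (for example HTTP → HTTPS, then non-WWW → WWW, then old → new). One way to check for this is to flatten a redirect map so each source points straight at its final destination. The sketch below assumes your redirects are available as a simple source-to-target dictionary; `flatten_redirects` is a hypothetical helper, not part of any server or library.

```python
def flatten_redirects(redirects: dict) -> dict:
    """Map every source URL directly to the final URL in its redirect chain."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until the target is not itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


redirects = {
    "http://example.com/old": "https://example.com/old",
    "https://example.com/old": "https://www.example.com/old",
    "https://www.example.com/old": "https://www.example.com/new/",
}
for source, final in flatten_redirects(redirects).items():
    print(source, "->", final)
```

Any source whose flattened target differs from its original target sits in a chain; pointing it directly at the final URL saves crawlers and visitors the extra hops.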

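3. Manage URL Parameters

Google retired the URL Parameters tool in Search Console in 2022, so parameter handling now has to happen on the site itself. Point parameterized URLs to the clean version with a canonical tag, link internally only to clean URLs, and consider robots.txt rules for parameter combinations that add no value and only consume crawl budget.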

4. Optimize Internal Linking

Ensure all internal links point to the preferred version of a URL rather than linking to multiple variations.

5. Noindex Low-Value Pages

Use the meta robots noindex tag on duplicate pages that do not add unique value. Add it to the page's <head> section:

<meta name="robots" content="noindex, follow">
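To confirm which pages actually carry the directive, you can read the robots meta tag out of the HTML. This is a small sketch with the standard-library `html.parser`; `RobotsMetaParser` and `robots_directives` are illustrative names, and the code looks only at the generic `robots` meta tag, not crawler-specific tags or the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated and case-insensitive.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html: str) -> set:
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = '<head><meta name="robots" content="noindex, follow"></head>'
print("noindex" in robots_directives(page))
```

Avoid combining noindex with a canonical tag that points to a different URL on the same page, because the two send conflicting signals.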

6. Improve Content Uniqueness

If duplicate content is due to thin or republished content, make substantial changes by:

- Adding unique insights and commentary.

- Expanding product descriptions with original content.

- Restructuring syndicated content to differentiate it from the original.

7. Use Hreflang for International Websites

If your site serves different regions, implement the hreflang tag correctly:

<link rel="alternate" hreflang="en-us" href="https://example.com/us/" />

<link rel="alternate" hreflang="en-gb" href="https://example.com/uk/" />

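This helps search engines show the right version in each region's results. Every version should carry the full set of annotations, including a reference to itself, and each alternate must link back; annotations without a return link may be ignored.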
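Missing return links are a common hreflang mistake, and they are easy to check once you have each page's annotations. The sketch below assumes you have already collected them into a dictionary of page URL to `{hreflang: alternate URL}`; `missing_return_links` is a hypothetical helper written for illustration.

```python
def missing_return_links(annotations: dict) -> list:
    """Return (page, alternate) pairs where the alternate does not link back."""
    problems = []
    for page, alternates in annotations.items():
        for alternate_url in alternates.values():
            if alternate_url == page:
                continue  # a self-reference needs no return link
            # Pages we have no data for count as not linking back.
            back_links = annotations.get(alternate_url, {})
            if page not in back_links.values():
                problems.append((page, alternate_url))
    return sorted(problems)


annotations = {
    "https://example.com/us/": {
        "en-us": "https://example.com/us/",
        "en-gb": "https://example.com/uk/",
    },
    # The UK page lists only itself, so the US page gets no return link.
    "https://example.com/uk/": {
        "en-gb": "https://example.com/uk/",
    },
}
print(missing_return_links(annotations))
```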

Monitoring and Maintenance

Regular monitoring prevents duplicate content issues from recurring. Best practices include:

- Weekly checks of the Page indexing report (formerly the Coverage report) in Google Search Console.

- Monthly site crawls to detect duplicate content.

- Quarterly audits of canonical and redirect implementations.

- Ongoing reviews of CMS-generated URLs.

By maintaining these practices, you reduce the risk that duplicate content drags down your rankings.

People Also Ask

How do I get rid of duplicate content?

To remove duplicate content, use 301 redirects, implement canonical tags, or set noindex directives for unnecessary pages. For e-commerce sites, write unique product descriptions rather than reusing the same copy across categories.

Is duplicate content bad for SEO?

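It can be. Duplicate content can dilute link equity and confuse search engines about which URL to index and rank. Google does not penalize ordinary duplication; the harm comes from split signals and the wrong version appearing in results, and manual actions are reserved for copying that is deliberately deceptive. Proper canonicalization and content differentiation are key to avoiding these issues.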

What to do with duplicate content?

You should either redirect it, canonicalize it, noindex it, or rewrite it to provide unique value. The approach depends on whether the duplicate content is technical, syndicated, or user-generated.

How would you minimize duplicate content?

- Use consistent URL structures and 301 redirects.

- Implement canonical tags on similar pages.

- Optimize internal linking to reference the primary URL.

- Avoid boilerplate content across multiple pages.

- Handle URL parameters with canonical tags and clean internal links, since Search Console's URL Parameters tool has been retired.

By following these strategies, you can manage duplicate content effectively, consolidate ranking signals on your preferred URLs, and improve your website's search visibility. Consistent monitoring and proactive optimization will keep duplicate content from resurfacing.